Reviewed by K.S.Loganathan

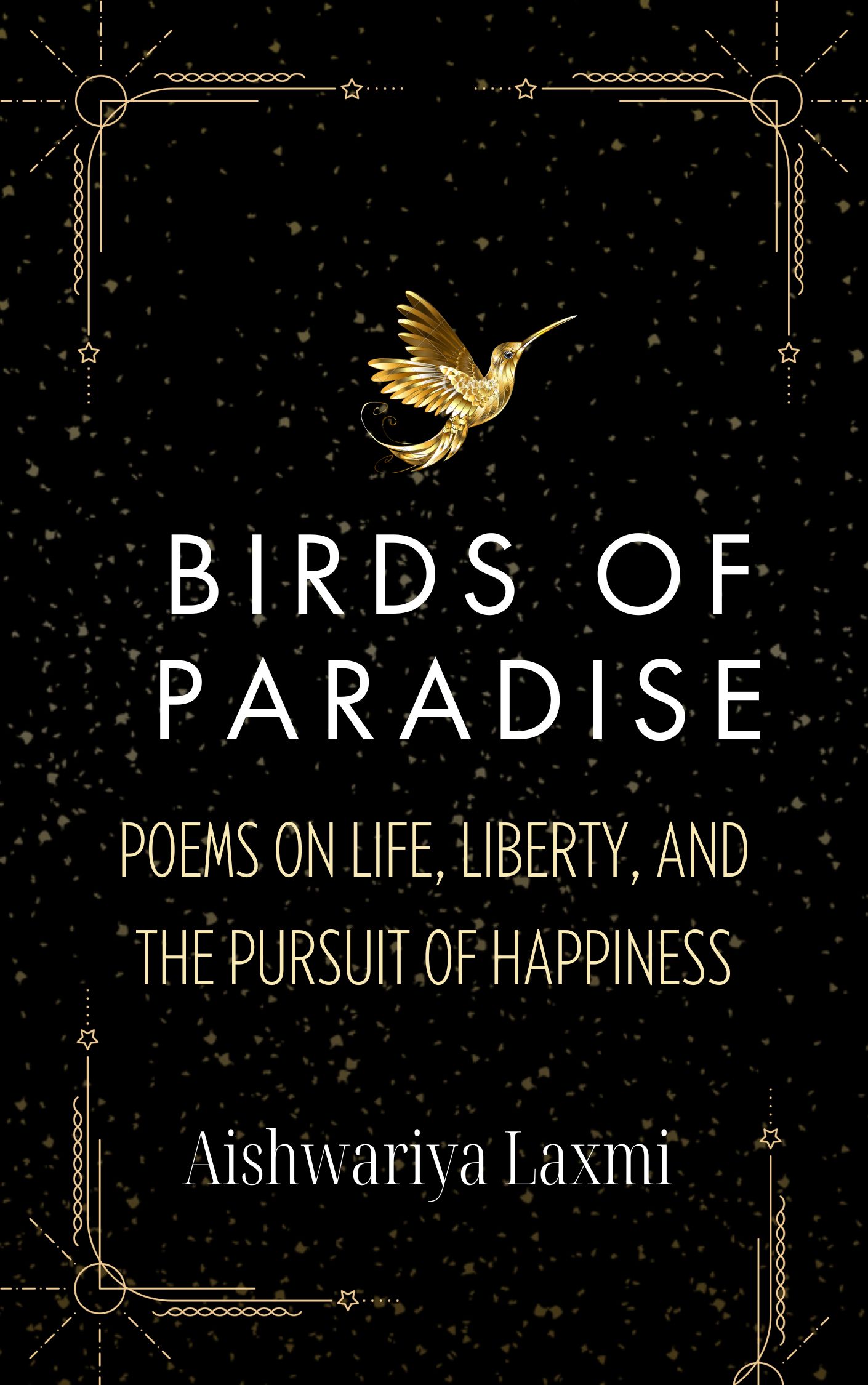

Publisher: Simon & Schuster, 2014.

Walter Isaacson is an American author, journalist, and professor, well known for his biographies of Steve Jobs, Elon Musk, Benjamin Franklin, and Henry Kissinger. He is also noted for his lectures on the intersection of the humanities and the sciences, and for his studies of modern American history and culture.

In this book, Isaacson traces the history of the digital revolution, which combined computers with distributed networks, just as steam power and machinery together drove the Industrial Revolution. Open networks linked to personal computers democratized the creation and sharing of content, as the printing press had done earlier, and at the same time enabled collaboration between humans and machines.

The book begins and ends with Ada Lovelace’s description of Charles Babbage’s proposed Analytical Engine and its potential to work not only with numbers but with any symbols whose relationships could be expressed logically, through processes such as algorithms, subroutines, and recursive loops. Although Babbage’s machine was never built, it is regarded as the forerunner of the modern digital computer.

Wartime exigencies spurred efforts to realize the concept of a “universal computer”, a Turing machine that could be programmed to perform or simulate any logical task. The Harvard Computation Laboratory was turned into a naval facility, where the Mark I, a fully automatic digital computer, was tasked with military problem-solving. British code breakers built Colossus, a special-purpose, fully electronic, digital (binary) computer, in 1944. Women such as Lt. Grace Hopper were in the vanguard of software development, translating nautical problems into mathematical equations. Most of the era’s inventions sprang from the interplay between creative individuals (such as Mauchly, Turing, von Neumann, and Aiken) and teams that knew how to implement their ideas.

A collaborative creative process was evident at Bell Labs, Los Alamos, Bletchley Park, and Penn. At Bell Labs, Walter Brattain, John Bardeen, and William Shockley pioneered the transistor, which allowed electronics to be miniaturized and began the trend of technology making devices personal. The civilian space program, along with the military program to build ballistic missiles, drove demand for transistors and for smaller, more powerful computers. Other notable innovations of the era included microchips and new computer circuitry, laser technology, and cellular telephony. New ways of organizing research, both collegial and entrepreneurial, new services such as venture capital, and new markets created by new applications were also intrinsic to the innovation process.

Video games arose from the idea that computers should interact with people in real time, have intuitive interfaces, and feature delightful graphic displays. The internet was built by a troika of the military, universities, and private corporations, a partnership that extended what is known as the “military-industrial complex”. The war had established beyond dispute that Vannevar Bush’s emphasis on basic science (discovering the fundamentals of nuclear physics, lasers, computer science, radar, and so on) led to practical applications essential to national security.

However, the internet also allowed innovations to be crowdsourced and open-source. J.C.R. Licklider and Bob Taylor foresaw that networked computers would foster online communities and collaboration. The dystopia George Orwell envisaged in his novel 1984 failed to materialize: by that year, personal computers had empowered individuals far more than Big Brother ever could.

Bill Gates and Paul Allen set about creating software for personal computers and licensing it; the hacker culture dwindled in the mid-1980s with the advent of proprietary software and password systems that discouraged community sharing. Tim Berners-Lee, the English computer scientist, proposed an information management system in 1989 that had become the World Wide Web by 1993. Google, designed as a scalable search engine, appeared in 1998, and Wikipedia later put information a click away from the public. The melding of machine and human intelligence to deal with information overload had truly begun.

My Views

The works, machinations, lives, and times of the digital pioneers and Silicon Valley entrepreneurs are laid out in engaging detail, much like a Harold Robbins novel about Hollywood and the crime of its era. The importance of teamwork in the creative process is well brought out, as are the tensions between proprietary “intellectual property” and open-source innovation; the current trend favors the more proprietary approach. Each chapter is copiously referenced. Isaacson is at his best on the interplay of the humanities and the sciences. In this rapidly expanding field, the push for innovation and tech-driven growth in artificial intelligence and machine learning has gathered momentum since the book was published, and readers interested in these developments may turn to later writings by Isaacson or others. The book is U.S.-centric, and parallel developments outside the U.S. and the U.K. are not mentioned at all. It is a standard reference on the history of the information age, with insightful comments on how innovation happens.